This is a GRPO finetune to remove slop from this model: Qwen3-4B-Instruct-2507-uncensored

It's not perfect; there are some before-and-after examples below.

I used (mostly) the same method as this model: gemma-3-4b-it-unslop-GRPO-v3

Note: This is not an RP tune; it's a compliant model with a different style from regular Qwen3 4B 2507.

My uncensoring dataset was generated by an abliterated Gemma 3 27B model, which added a lot of Gemma's writing style to this model.

It also added some Gemma-style slop, which this finetune has helped mitigate.
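One simple way to quantify slop is to count occurrences of overused phrases, which is roughly what the highlighted totals in the examples below appear to show. The sketch that follows is an illustration under stated assumptions, not the method used for this finetune or for gemma-3-4b-it-unslop-GRPO-v3: the phrase list, `count_slop`, and `slop_reward` are hypothetical names, and normalizing hits per 100 words is an arbitrary choice made here so longer outputs aren't penalized just for length.

```python
import re

# Hypothetical phrase list for illustration only; this is NOT the list
# used to train or evaluate this model.
SLOP_PHRASES = (
    "a testament to",
    "tapestry",
    "shivers down",
    "barely above a whisper",
    "palpable",
)


def count_slop(text: str, phrases: tuple[str, ...] = SLOP_PHRASES) -> dict[str, int]:
    """Count case-insensitive, whole-word occurrences of each phrase in text.

    Only phrases that occur at least once appear in the result.
    """
    lowered = text.lower()
    counts: dict[str, int] = {}
    for phrase in phrases:
        # \b anchors stop "palpable" from matching inside e.g. "impalpable".
        pattern = r"\b" + re.escape(phrase.lower()) + r"\b"
        hits = len(re.findall(pattern, lowered))
        if hits:
            counts[phrase] = hits
    return counts


def slop_reward(text: str) -> float:
    """Toy GRPO-style reward (hypothetical): fewer slop hits per 100 words is better."""
    words = max(len(text.split()), 1)  # avoid division by zero on empty text
    hits = sum(count_slop(text).values())
    return -100.0 * hits / words


if __name__ == "__main__":
    sample = "Her voice was barely above a whisper, a testament to the palpable tension."
    print(count_slop(sample))
    print(slop_reward(sample))
```

In a GRPO setup, a function like `slop_reward` would be one reward signal among several, scoring each sampled completion so the policy is nudged toward phrasing with fewer hits.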

I've uploaded a UD-Q4_K_XL GGUF with settings that I grabbed from Unsloth's quant using my lil utility: quant_clone

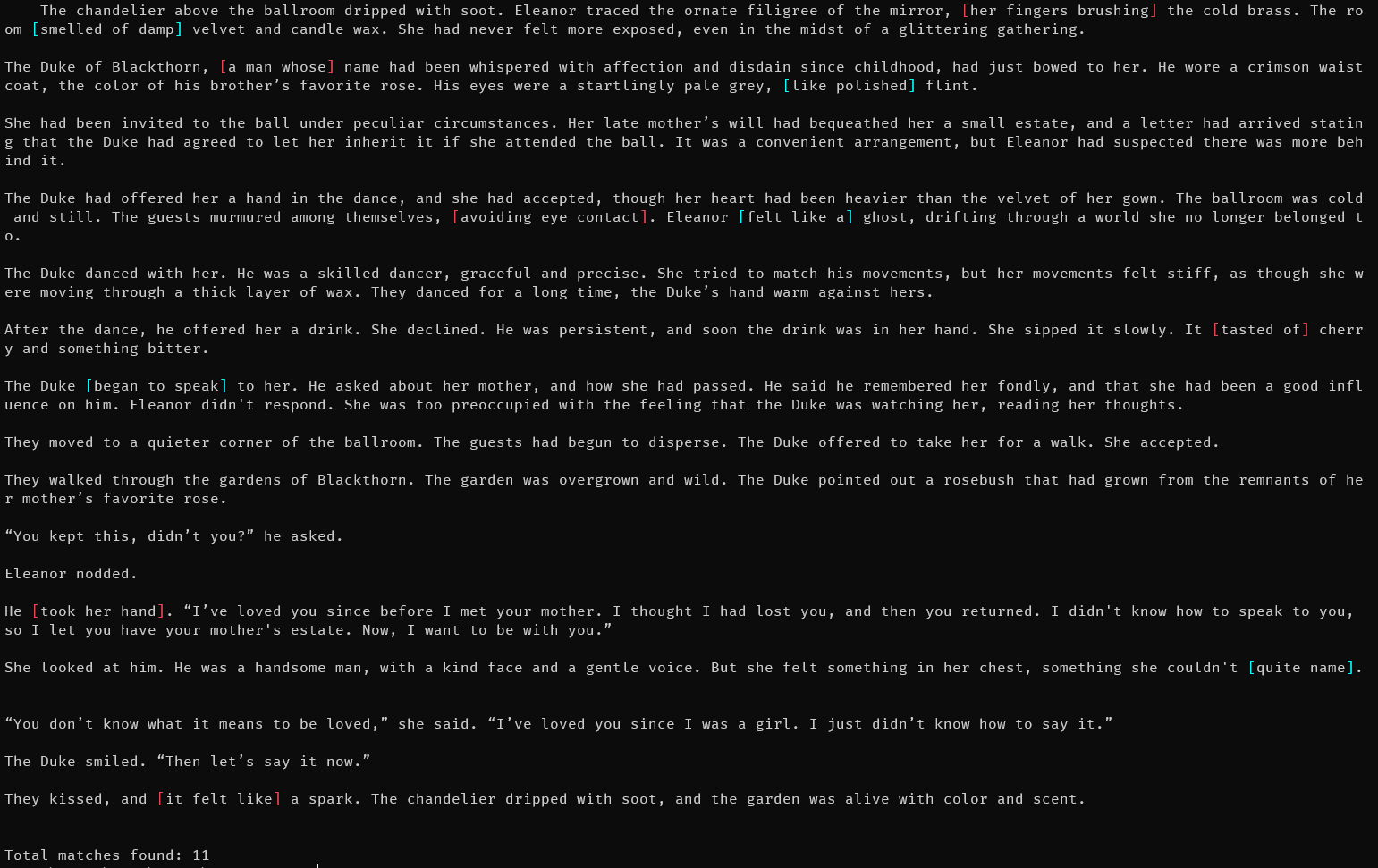

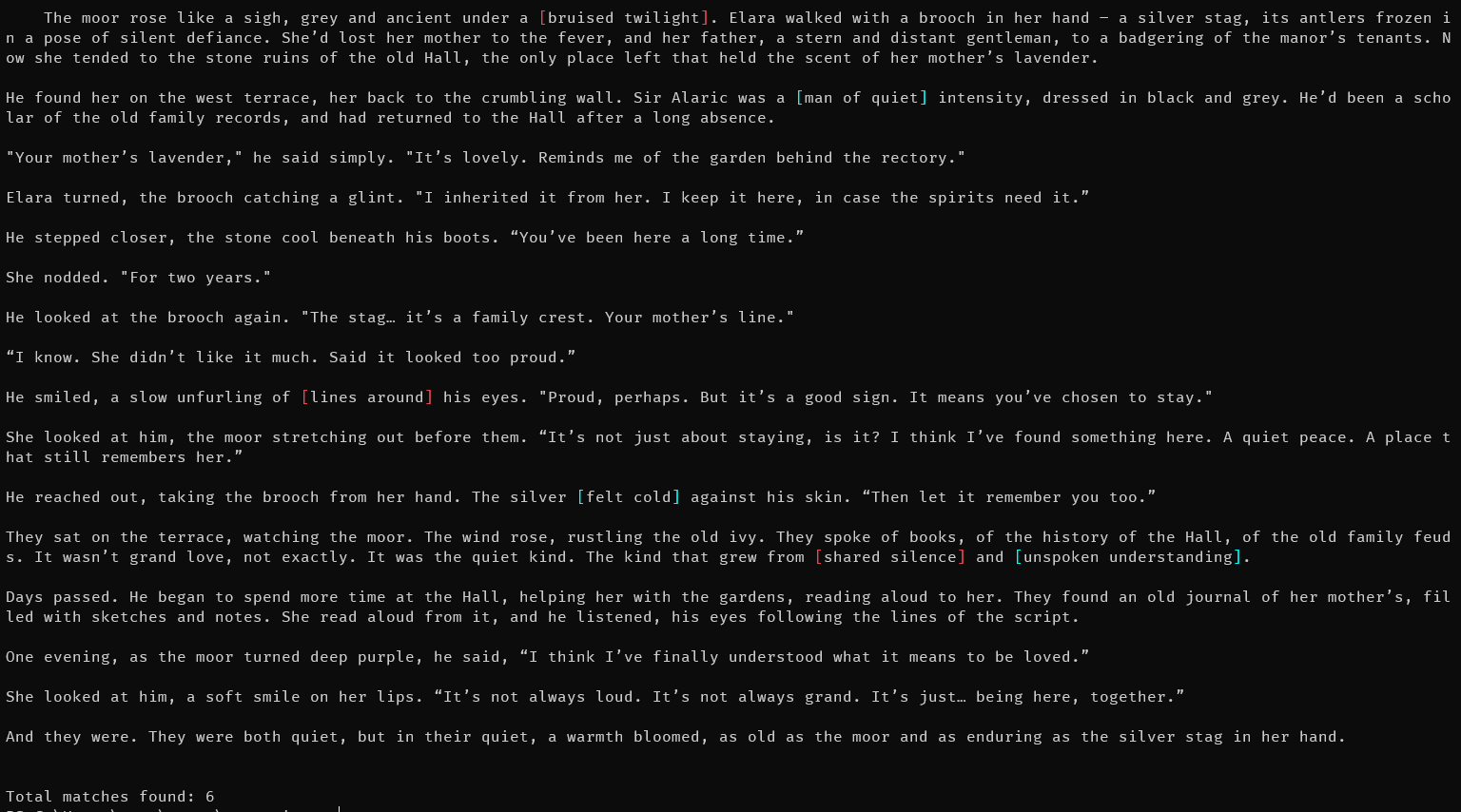

Here are some pics of before-and-after output, with the slop highlighted and the slop total at the bottom:

Prompt = "write a short story about a gothic romance, it should be around 500 words long"

Before:

After:
