---
language: ary
language_name: Moroccan Arabic
language_family: arabic
tags:
- wikilangs
- nlp
- tokenizer
- embeddings
- n-gram
- markov
- wikipedia
- feature-extraction
- sentence-similarity
- tokenization
- n-grams
- markov-chain
- text-mining
- fasttext
- babelvec
- vocabulous
- vocabulary
- monolingual
- family-arabic
license: mit
library_name: wikilangs
pipeline_tag: text-generation
datasets:
- omarkamali/wikipedia-monthly
dataset_info:
  name: wikipedia-monthly
  description: Monthly snapshots of Wikipedia articles across 300+ languages
metrics:
- name: best_compression_ratio
  type: compression
  value: 4.171
- name: best_isotropy
  type: isotropy
  value: 0.8284
- name: vocabulary_size
  type: vocab
  value: 0
generated: 2026-01-03T00:00:00.000Z
---

Moroccan Arabic - Wikilangs Models

Comprehensive Research Report & Full Ablation Study

This repository contains NLP models trained and evaluated by Wikilangs, specifically on Moroccan Arabic Wikipedia data. We analyze tokenizers, n-gram models, Markov chains, vocabulary statistics, and word embeddings.

📋 Repository Contents

Models & Assets

- Tokenizers (8k, 16k, 32k, 64k)

- N-gram models (2, 3, 4, 5-gram)

- Markov chains (context of 1, 2, 3, 4 and 5)

- Subword N-gram and Markov chains

- Embeddings in various sizes and dimensions (aligned and unaligned)

- Language Vocabulary

- Language Statistics

Analysis and Evaluation

- 1. Tokenizer Evaluation

- 2. N-gram Model Evaluation

- 3. Markov Chain Evaluation

- 4. Vocabulary Analysis

- 5. Word Embeddings Evaluation

- 6. Morphological Analysis (Experimental)

- 7. Summary & Recommendations

- Metrics Glossary

- Visualizations Index

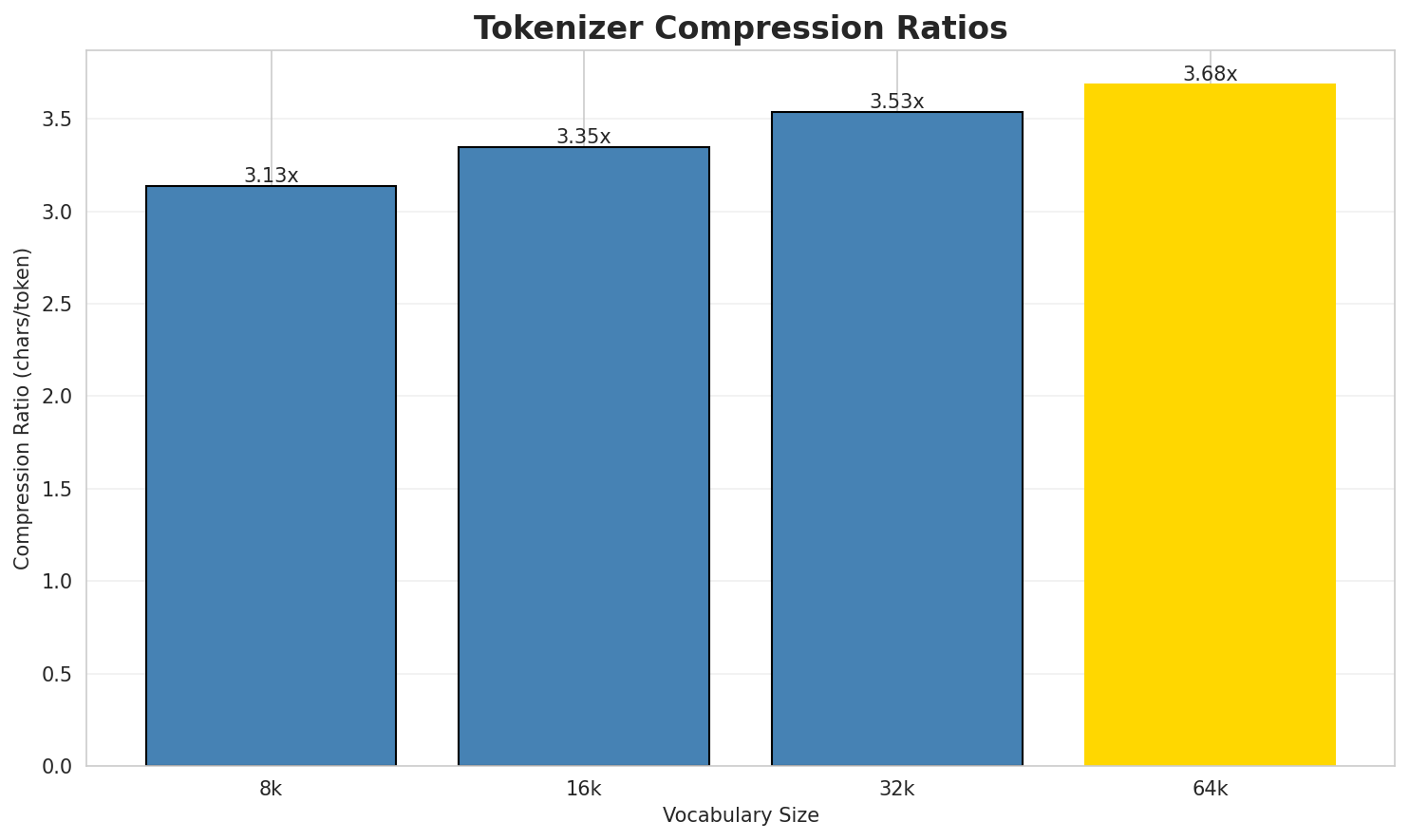

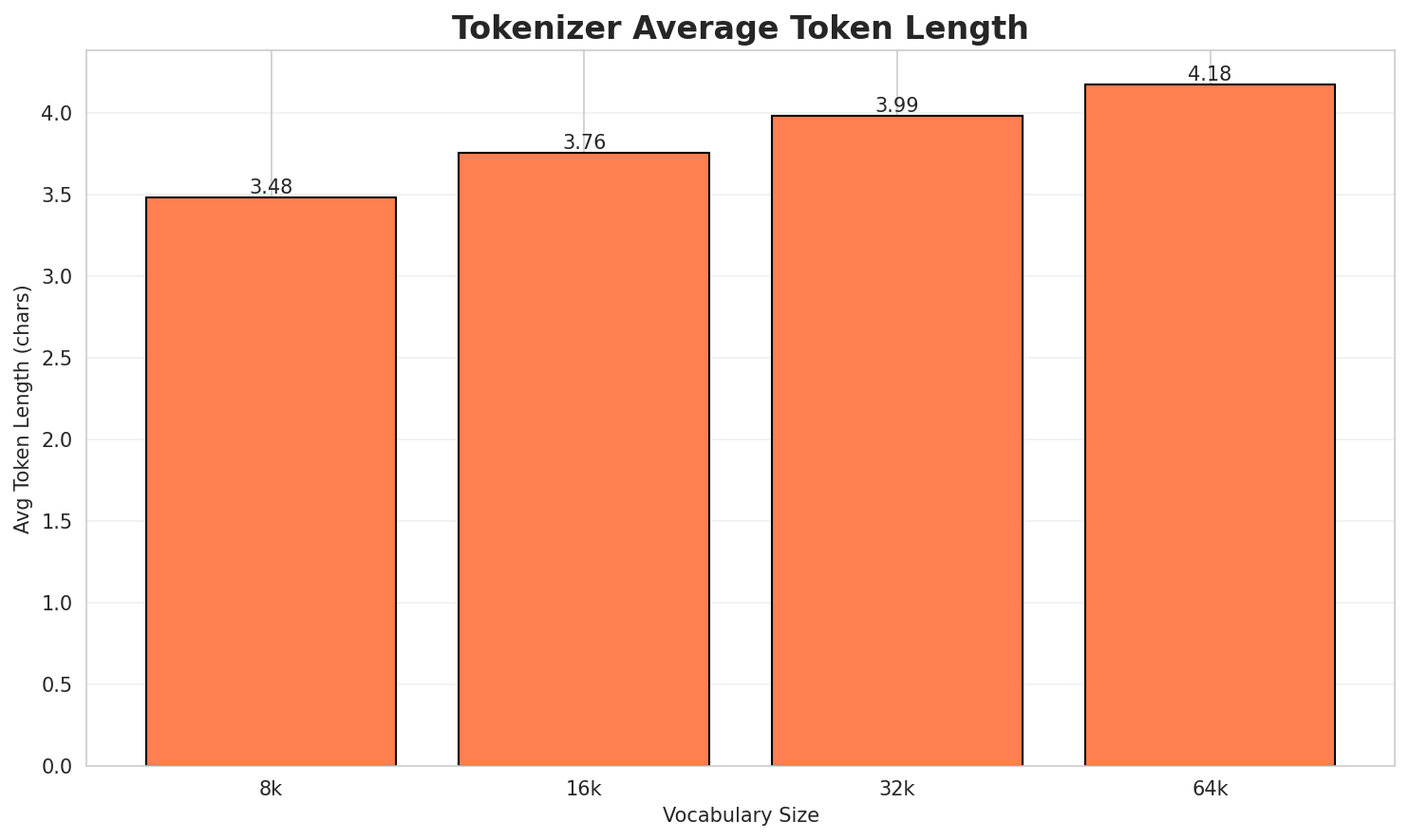

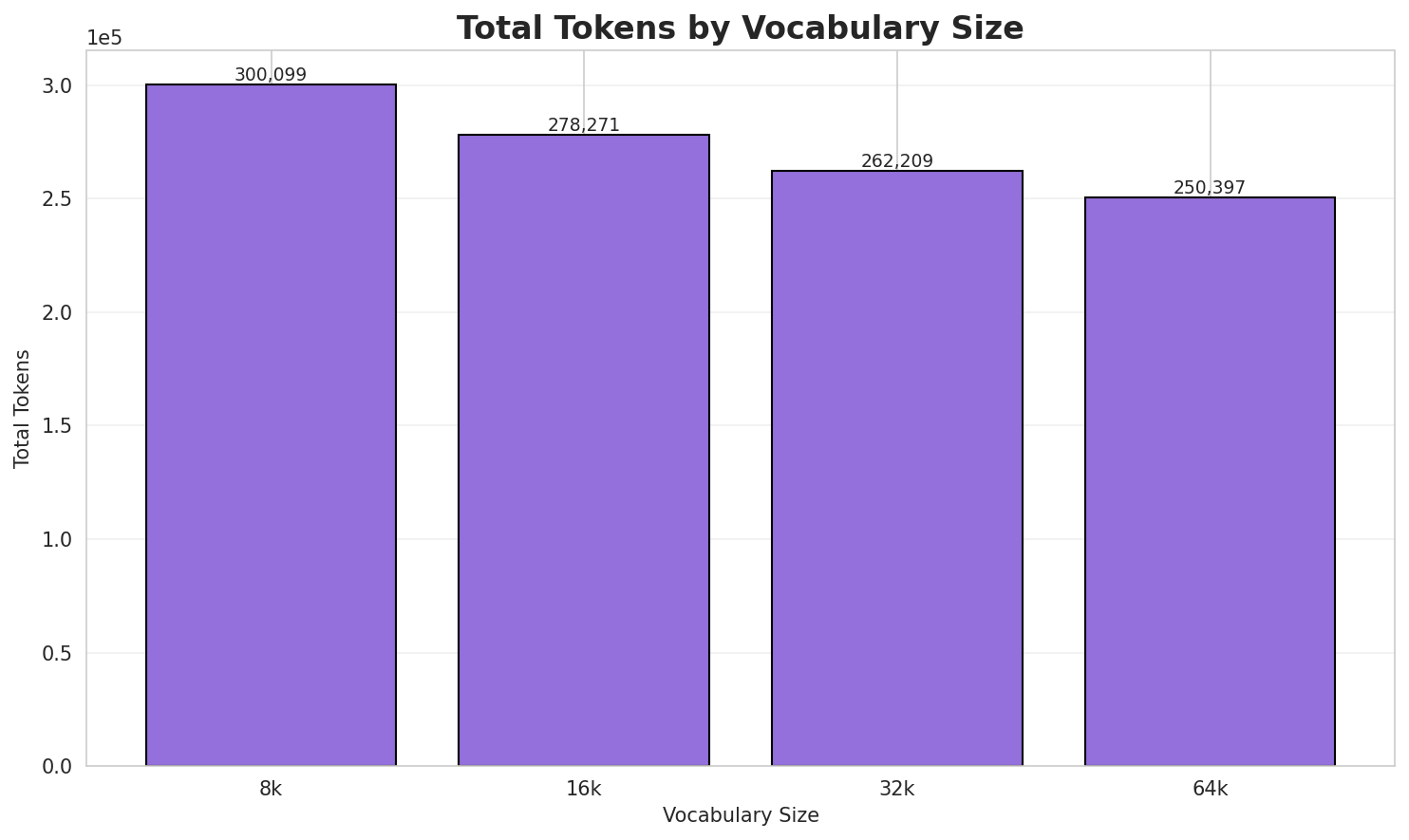

1. Tokenizer Evaluation

Results

| Vocab Size | Compression | Avg Token Len | UNK Rate | Total Tokens |
|---|---|---|---|---|
| 8k | 3.480x | 3.48 | 0.0910% | 300,099 |
| 16k | 3.753x | 3.76 | 0.0981% | 278,271 |
| 32k | 3.983x | 3.99 | 0.1041% | 262,209 |
| 64k | 4.171x 🏆 | 4.18 | 0.1090% | 250,397 |

Tokenization Examples

Below are sample sentences tokenized with each vocabulary size:

Sample 1: هادي صفحة د التوضيح، كلمة بركان يمكن يكونو عندها هاد لمعاني: بْرْكان: مدينة مغري...

| Vocab | Tokens | Count |
|---|---|---|
| 8k | ▁هادي ▁صفحة ▁د ▁التوضيح ، ▁كلمة ▁بركان ▁يمكن ▁يكونو ▁عندها ... (+23 more) | 33 |
| 16k | ▁هادي ▁صفحة ▁د ▁التوضيح ، ▁كلمة ▁بركان ▁يمكن ▁يكونو ▁عندها ... (+21 more) | 31 |
| 32k | ▁هادي ▁صفحة ▁د ▁التوضيح ، ▁كلمة ▁بركان ▁يمكن ▁يكونو ▁عندها ... (+19 more) | 29 |
| 64k | ▁هادي ▁صفحة ▁د ▁التوضيح ، ▁كلمة ▁بركان ▁يمكن ▁يكونو ▁عندها ... (+18 more) | 28 |

Sample 2: لْفزضاض ؤلا أفزضاض (سمية لعلمية Microcosmus sabatieri) حيوان لاسنسولي كيعيش ف لب...

| Vocab | Tokens | Count |
|---|---|---|
| 8k | ▁لْ ف ز ضاض ▁ؤلا ▁أف ز ضاض ▁( سمية ... (+31 more) | 41 |
| 16k | ▁لْ ف ز ضاض ▁ؤلا ▁أف ز ضاض ▁( سمية ... (+28 more) | 38 |
| 32k | ▁لْف ز ضاض ▁ؤلا ▁أف ز ضاض ▁( سمية ▁لعلمية ... (+25 more) | 35 |
| 64k | ▁لْف زضاض ▁ؤلا ▁أف زضاض ▁( سمية ▁لعلمية ▁microcos mus ... (+17 more) | 27 |

Sample 3: نيلز أبراهام لانݣليت (مزيود ف 9 يوليوز - مات ف 30 مارس هوّا عالم د شّيمي سويدي. ...

| Vocab | Tokens | Count |
|---|---|---|
| 8k | ▁نيل ز ▁أب راهام ▁ل انݣ ليت ▁( مزيود ▁ف ... (+19 more) | 29 |
| 16k | ▁نيل ز ▁أبراهام ▁ل انݣ ليت ▁( مزيود ▁ف ▁ ... (+16 more) | 26 |
| 32k | ▁نيلز ▁أبراهام ▁لانݣ ليت ▁( مزيود ▁ف ▁ 9 ▁يوليوز ... (+14 more) | 24 |
| 64k | ▁نيلز ▁أبراهام ▁لانݣليت ▁( مزيود ▁ف ▁ 9 ▁يوليوز ▁- ... (+13 more) | 23 |

Key Findings

- Best Compression: 64k achieves 4.171x compression

- Lowest UNK Rate: 8k with 0.0910% unknown tokens

- Trade-off: Larger vocabularies improve compression (3.480x at 8k to 4.171x at 64k) but increase model size and slightly raise the UNK rate (0.0910% to 0.1090%)

- Recommendation: 32k vocabulary provides optimal balance for production use

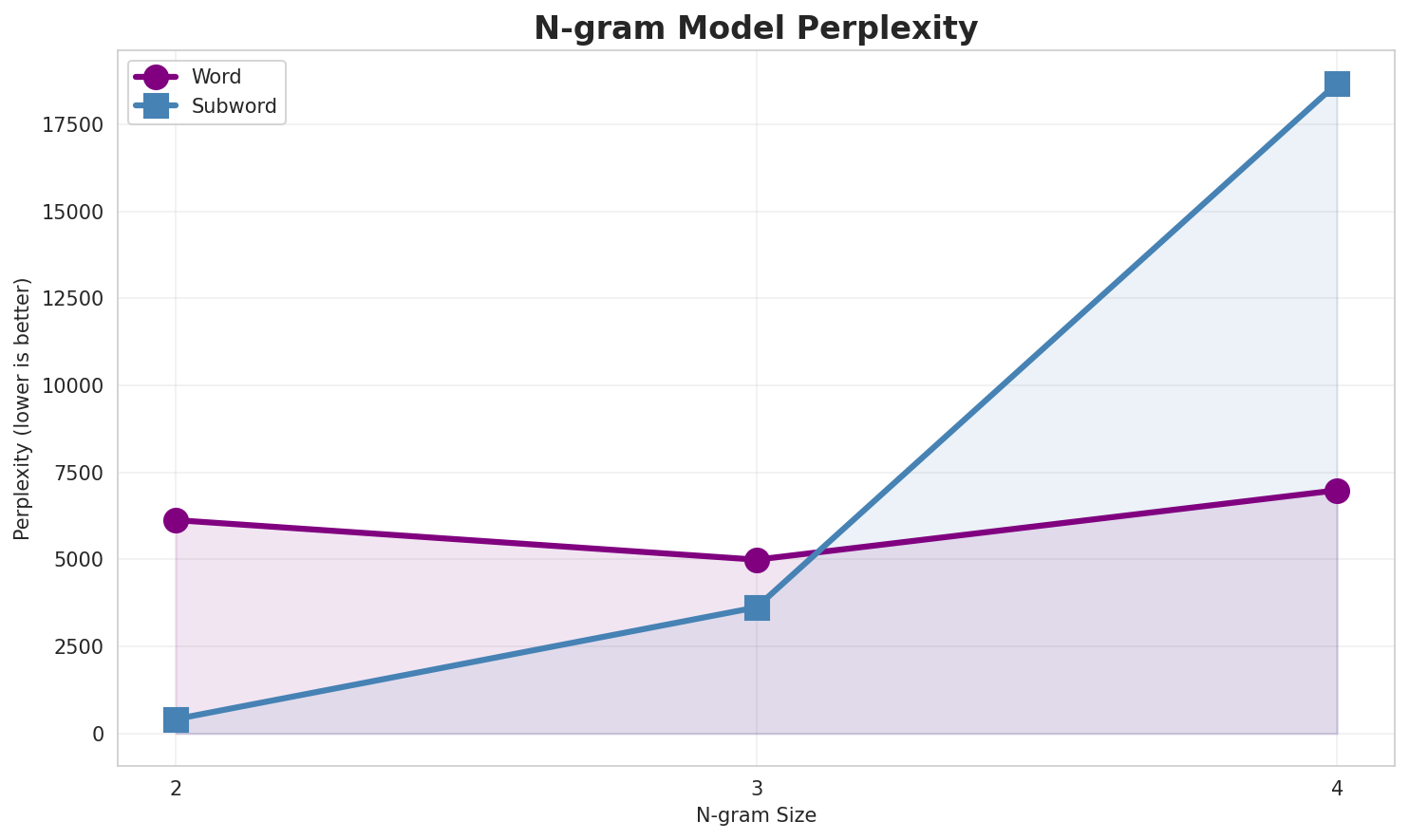

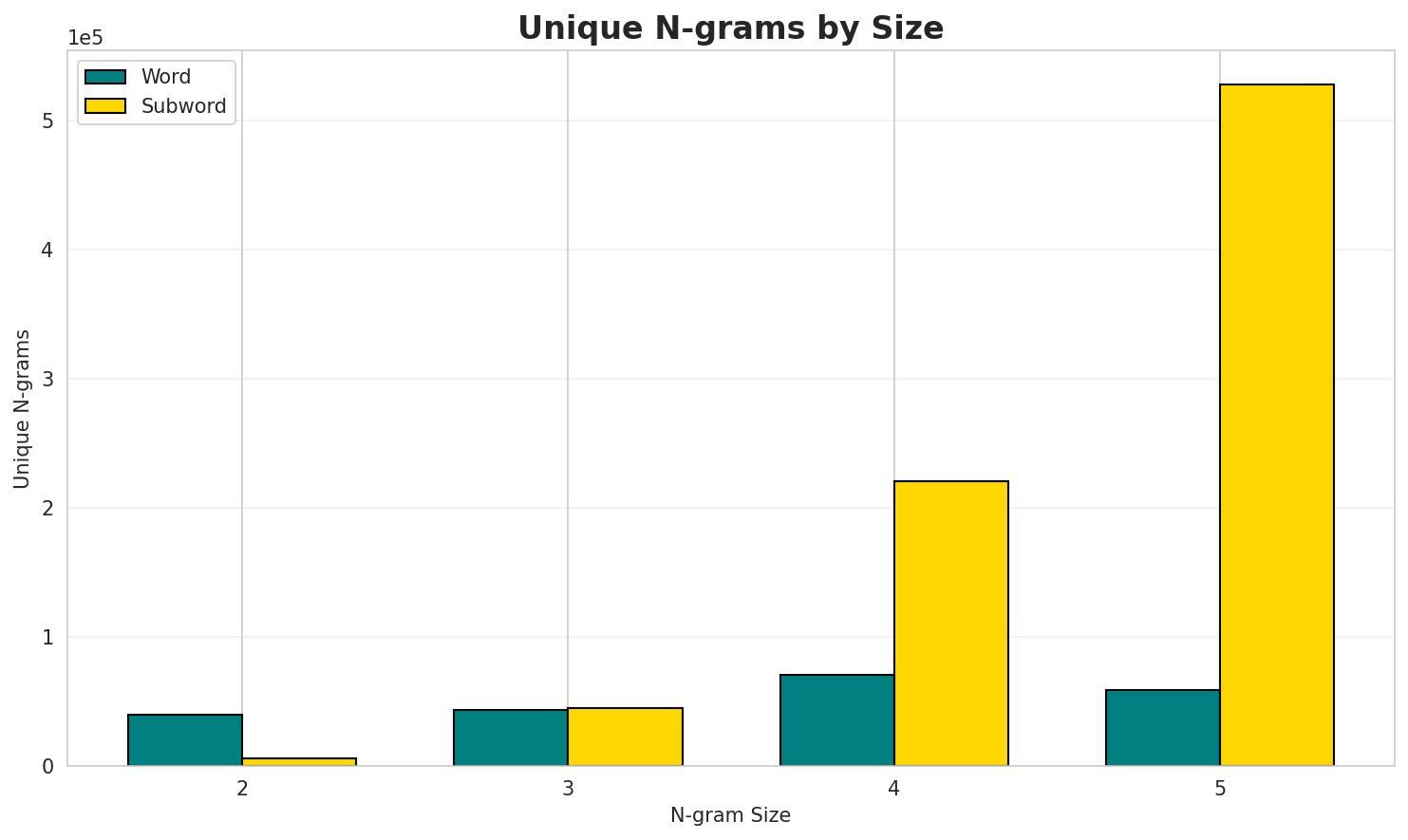

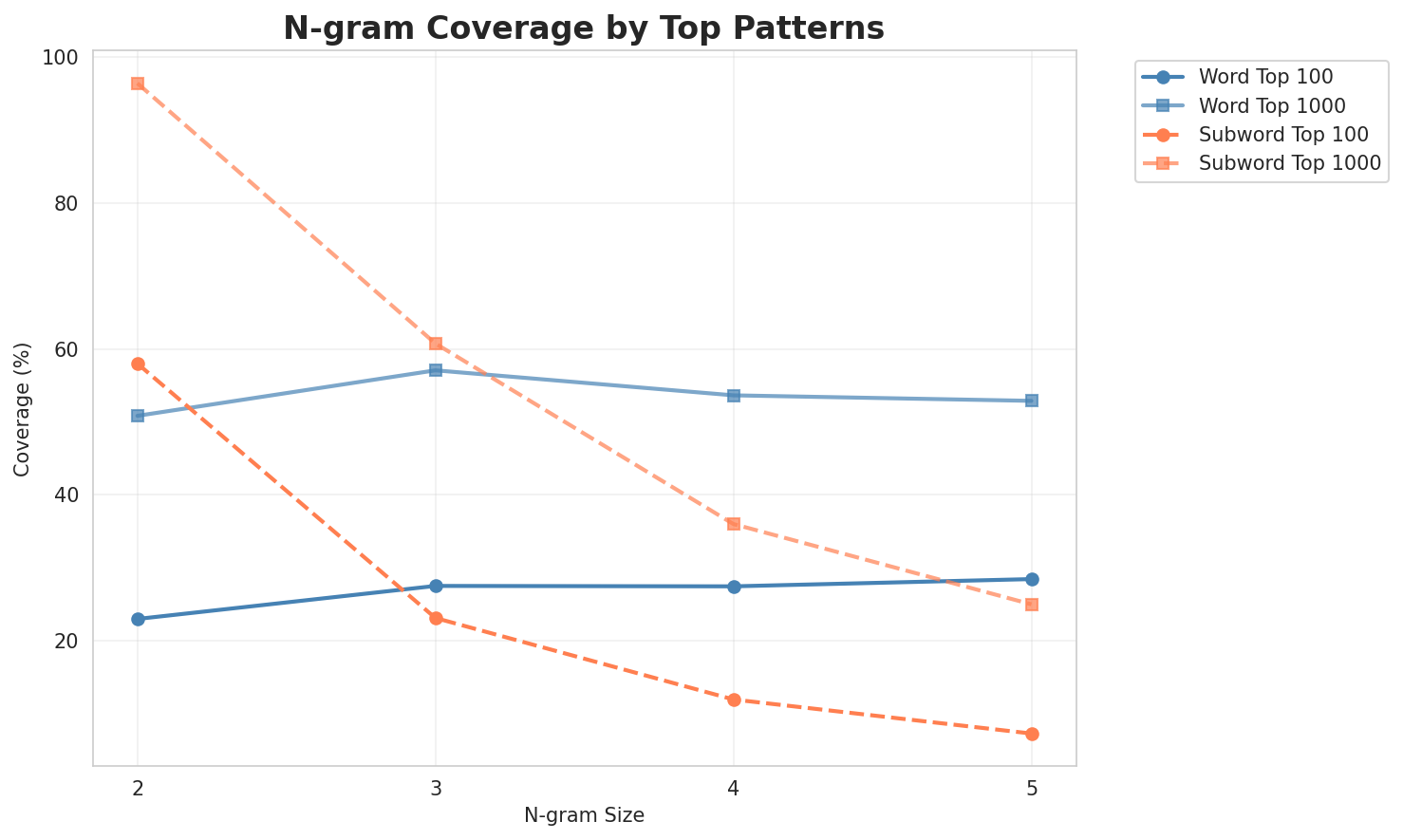

2. N-gram Model Evaluation

Results

| N-gram | Variant | Perplexity | Entropy | Unique N-grams | Top-100 Coverage | Top-1000 Coverage |
|---|---|---|---|---|---|---|
| 2-gram | Word | 7,228 | 12.82 | 39,512 | 23.0% | 50.8% |
| 2-gram | Subword | 424 🏆 | 8.73 | 5,903 | 58.0% | 96.4% |
| 3-gram | Word | 5,655 | 12.47 | 43,555 | 27.5% | 57.1% |
| 3-gram | Subword | 3,784 | 11.89 | 44,651 | 23.1% | 60.7% |
| 4-gram | Word | 7,985 | 12.96 | 70,559 | 27.5% | 53.6% |
| 4-gram | Subword | 20,064 | 14.29 | 220,807 | 12.0% | 36.0% |
| 5-gram | Word | 7,565 | 12.89 | 58,964 | 28.5% | 52.9% |
| 5-gram | Subword | 62,379 | 15.93 | 527,725 | 7.3% | 25.0% |

Top 5 N-grams by Size

2-grams (Word):

| Rank | N-gram | Count |
|---|---|---|
| 1 | واصلة ل | 8,540 |
| 2 | نسبة د | 7,170 |
| 3 | ف لمغريب | 6,305 |
| 4 | ف إقليم | 6,018 |
| 5 | ف نسبة | 4,265 |

3-grams (Word):

| Rank | N-gram | Count |
|---|---|---|
| 1 | ف نسبة د | 4,264 |
| 2 | فيها مصدر و | 3,236 |
| 3 | و نسبة د | 2,894 |
| 4 | مصدر و بايت | 2,856 |
| 5 | اللي خدامين ف | 2,760 |

4-grams (Word):

| Rank | N-gram | Count |
|---|---|---|
| 1 | فيها مصدر و بايت | 2,856 |
| 2 | نسبة نّاس اللي خدامين | 2,705 |
| 3 | نّاس اللي خدامين ف | 2,594 |
| 4 | على حساب لإحصاء الرسمي | 2,501 |
| 5 | حساب لإحصاء الرسمي د | 2,500 |

5-grams (Word):

| Rank | N-gram | Count |
|---|---|---|
| 1 | نسبة نّاس اللي خدامين ف | 2,593 |
| 2 | ف لمغريب هاد دّوار كينتامي | 2,500 |
| 3 | هاد دّوار كينتامي ل مشيخة | 2,500 |
| 4 | لمغريب هاد دّوار كينتامي ل | 2,500 |
| 5 | حساب لإحصاء الرسمي د عام | 2,500 |

2-grams (Subword):

| Rank | N-gram | Count |
|---|---|---|
| 1 | ا ل | 347,466 |
| 2 | _ ل | 278,371 |
| 3 | ة _ | 229,442 |
| 4 | _ ا | 220,960 |
| 5 | _ م | 156,801 |

3-grams (Subword):

| Rank | N-gram | Count |
|---|---|---|
| 1 | _ ا ل | 216,048 |
| 2 | _ ف _ | 83,146 |
| 3 | ا ت _ | 63,800 |
| 4 | ي ة _ | 60,271 |
| 5 | _ د _ | 59,563 |

4-grams (Subword):

| Rank | N-gram | Count |
|---|---|---|
| 1 | _ د ي ا | 47,798 |
| 2 | د ي ا ل | 47,559 |
| 3 | ي ا ل _ | 33,039 |
| 4 | د _ ا ل | 32,831 |
| 5 | _ م ن _ | 28,909 |

5-grams (Subword):

| Rank | N-gram | Count |
|---|---|---|
| 1 | _ د ي ا ل | 47,427 |
| 2 | د ي ا ل _ | 32,608 |
| 3 | _ ع ل ى _ | 19,473 |
| 4 | _ ا ل ل ي | 18,967 |
| 5 | ا ل ل ي _ | 18,744 |

Key Findings

- Best Perplexity: 2-gram (subword) with 424

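- Entropy Trend: Subword entropy rises with n (8.73 bits for 2-grams to 15.93 bits for 5-grams) as the number of unique n-grams grows from 5,903 to 527,725; word-level entropy stays roughly flat (12.47-12.96 bits)

- Coverage: Top-1000 patterns cover between 25.0% (5-gram subword) and 96.4% (2-gram subword) of n-gram occurrences

- Recommendation: 2-gram subword for the lowest perplexity; among word-level models, the 3-gram has the lowest perplexity (5,655)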
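As a quick consistency check on the results table, perplexity should equal 2 raised to the entropy in bits. The snippet below copies the eight (entropy, perplexity) pairs from the table and compares them. It assumes the reported entropies are rounded to two decimals, which on its own allows a gap of roughly 0.35% (2^0.005 ≈ 1.0035).

```python
# (entropy in bits, reported perplexity), copied from the n-gram results table.
NGRAM_ROWS = {
    "2-gram word": (12.82, 7228),
    "2-gram subword": (8.73, 424),
    "3-gram word": (12.47, 5655),
    "3-gram subword": (11.89, 3784),
    "4-gram word": (12.96, 7985),
    "4-gram subword": (14.29, 20064),
    "5-gram word": (12.89, 7565),
    "5-gram subword": (15.93, 62379),
}


def relative_gap(entropy_bits, reported_ppl):
    """Relative difference between 2**entropy and the reported perplexity."""
    return abs(2 ** entropy_bits - reported_ppl) / reported_ppl


for name, (h, ppl) in NGRAM_ROWS.items():
    print(f"{name:15s} 2^H = {2 ** h:9.1f}  reported = {ppl:6d}  gap = {relative_gap(h, ppl):.2%}")
```

Every row agrees to within that rounding tolerance, so the Perplexity and Entropy columns carry the same information on two different scales.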

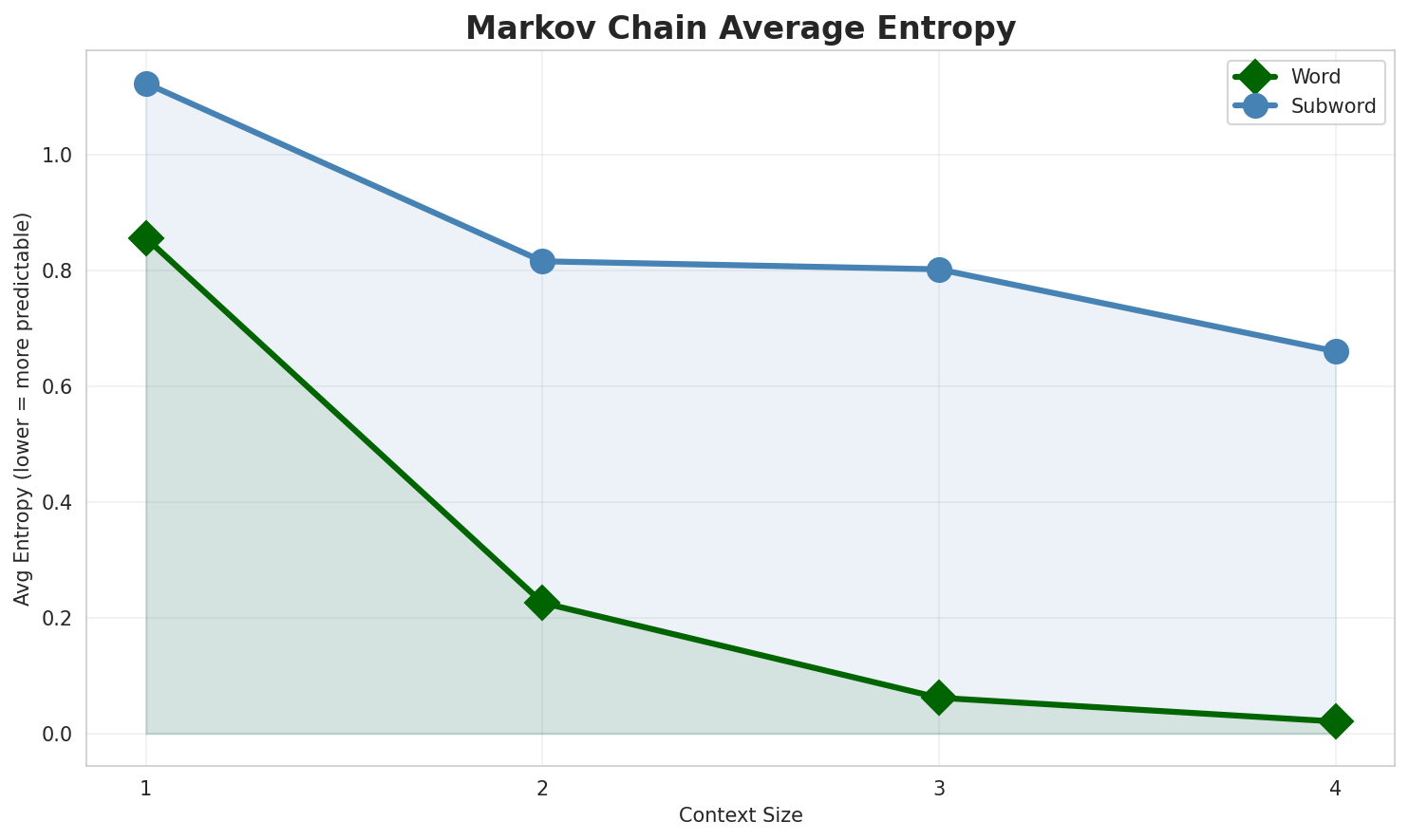

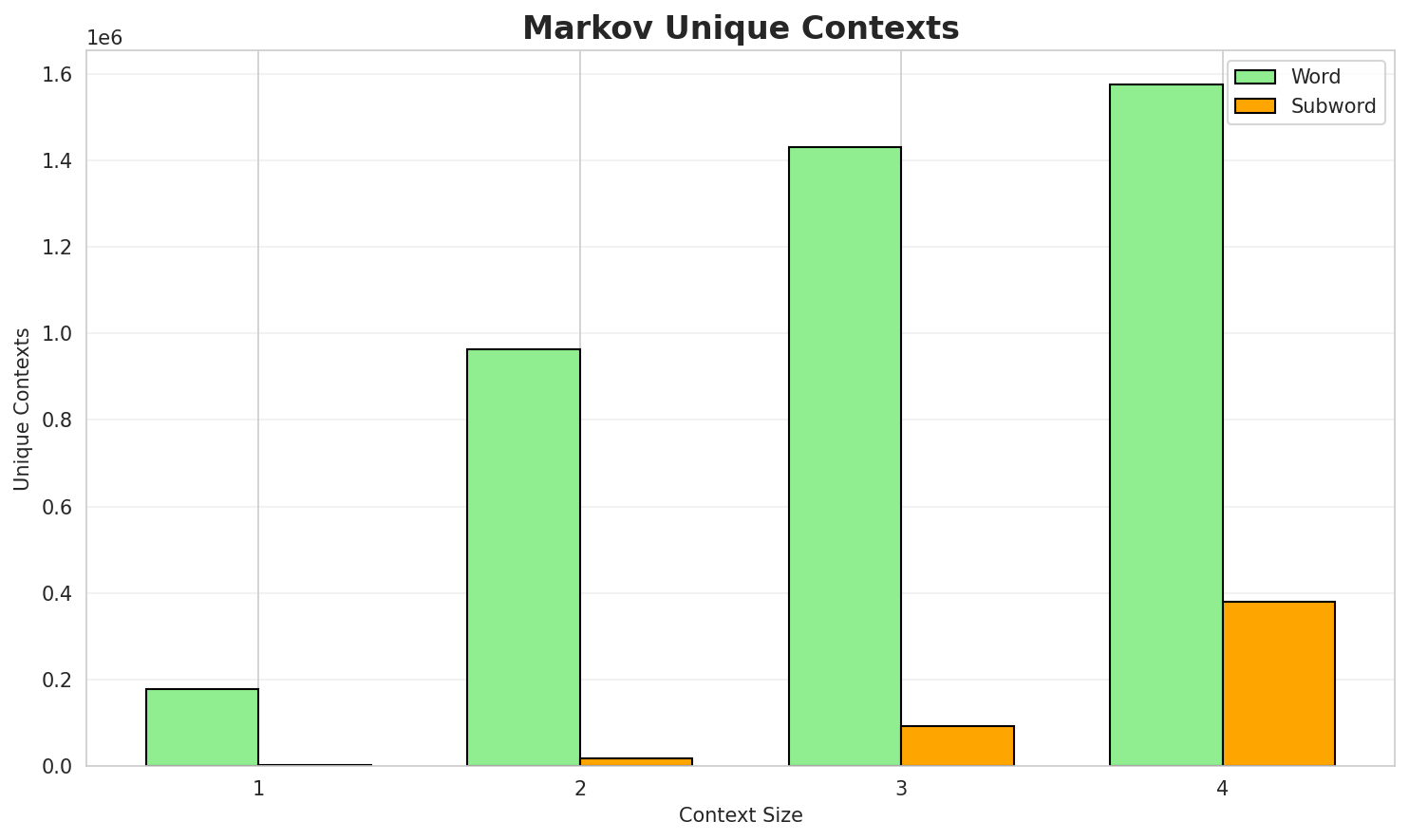

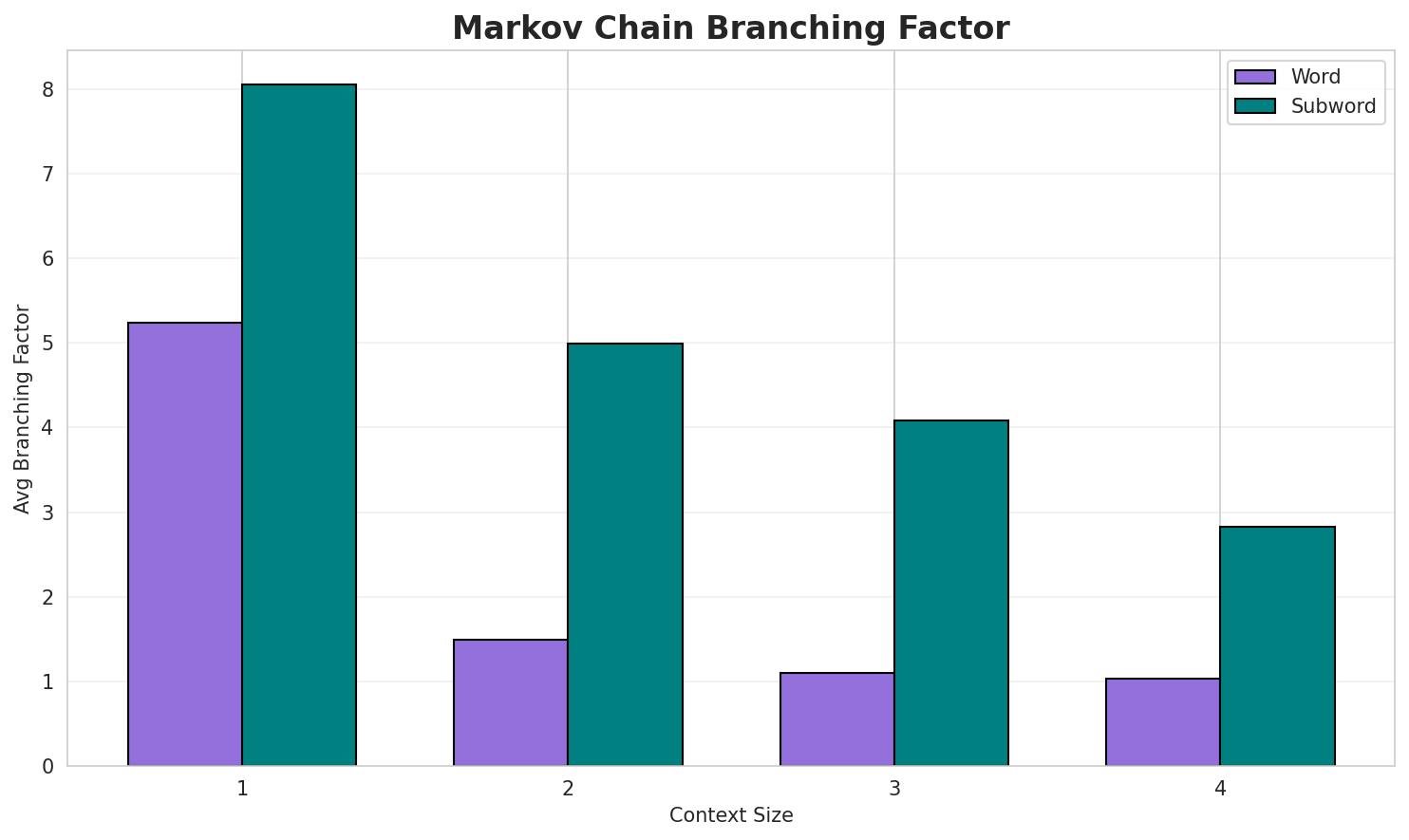

3. Markov Chain Evaluation

Results

| Context | Variant | Avg Entropy | Perplexity | Branching Factor | Unique Contexts | Predictability |
|---|---|---|---|---|---|---|
| 1 | Word | 0.8561 | 1.810 | 5.38 | 178,865 | 14.4% |
| 1 | Subword | 1.1236 | 2.179 | 8.36 | 2,156 | 0.0% |
| 2 | Word | 0.2259 | 1.169 | 1.49 | 962,233 | 77.4% |
| 2 | Subword | 0.8160 | 1.761 | 5.10 | 18,029 | 18.4% |
| 3 | Word | 0.0618 | 1.044 | 1.10 | 1,431,084 | 93.8% |
| 3 | Subword | 0.8022 | 1.744 | 4.13 | 91,858 | 19.8% |
| 4 | Word | 0.0208 🏆 | 1.015 | 1.04 | 1,574,083 | 97.9% |
| 4 | Subword | 0.6604 | 1.581 | 2.86 | 379,445 | 34.0% |

Generated Text Samples (Word-based)

Below are text samples generated from each word-based Markov chain model:

Context Size 1:

ف لمغريب فيها 5 463 462 461 كم من غير ب شبه منقّر مكررعبد المسيح فيو أداب روسيا ف لمغريب ف وقت مابين اللغات الرسمية ديال حيزب لإستقلال تا سينيما ليهاد الناس فليبيا اكتشفو أنه يتقتل ولكن بقات كتلعب فالتيران ديال هاد الريحلة معا لمونتاخاب و

Context Size 2:

- واصلة ل 98 6 و عدد لفاميلات تزاد ب 81 6 و نسبة د الناس و لمحيط
- نسبة د الشوماج واصلة ل 21 12 نوطات مصادر ف لمغريب جّبل معروف عند الصامويين حتال ليوم
- ف لمغريب هاد دّوار كينتامي ل مشيخة سدي حمد الدغوغي لي كتضم 14 د دّواور لعاداد د

Context Size 3:

- ف نسبة د التسكويل واصلة ل 91 89 و نسبة د الشوماج واصلة ل 7 6 و لخصوبة
- فيها مصدر و بايت زادهوم داريجابوت حيين مغاربا د لقرن 21 مغاربا مغاربا فيها مصدر و بايت زادهوم
- و نسبة د لأمية واصلة ل 53 4 و نسبة د لأمية واصلة ل 92 5 و نسبة

Context Size 4:

- نسبة نّاس اللي خدامين ف دّولة ولا لبيطاليين اللي سبق ليهوم خدمو 44 3 نسبة نّاس اللي خدامين ف
- نّاس اللي خدامين ف لپريڤي ولا لبيطاليين اللي سبق ليهوم مصادر الدار البيضاء سطات قروية ف إقليم سطات ق...
- على حساب لإحصاء الرسمي د عام إحصائيات إحصائيات عامة عدد السكان ديال أورسفان نقص ب 30 7 و عدد
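The word-level samples above come from repeatedly drawing the next word given the previous n words. Below is a minimal sketch of that procedure, assuming whitespace-tokenized input and frequency-weighted sampling. `train_chain` and `generate` are hypothetical helper names, not part of the wikilangs package, and the report does not state how it chooses the starting context.

```python
import random
from collections import Counter, defaultdict


def train_chain(tokens, order):
    """Map each context (tuple of `order` tokens) to a Counter of next tokens."""
    chain = defaultdict(Counter)
    for i in range(len(tokens) - order):
        chain[tuple(tokens[i:i + order])][tokens[i + order]] += 1
    return chain


def generate(chain, seed_context, length, rng=None):
    """Sample up to `length` tokens starting from `seed_context`.

    Stops early if the current context was never observed in training.
    """
    rng = rng or random.Random(0)
    out = list(seed_context)
    order = len(seed_context)
    while len(out) < length:
        nexts = chain.get(tuple(out[-order:]))  # .get() does not create empty entries
        if not nexts:
            break
        words, weights = zip(*nexts.items())
        out.append(rng.choices(words, weights=weights)[0])
    return out
```

With larger contexts most contexts have a single observed continuation, which is why the context-3 and context-4 samples reproduce long stretches of templated census text verbatim.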

Generated Text Samples (Subword-based)

Below are text samples generated from each subword-based Markov chain model:

Context Size 1:

_دّرى_لجالب_لتالعاكترن_لعاميلة_ن_لت_پرومدي_و_ماتم

Context Size 2:

الرجل_بين_ماعة_لخ_لكينو_العرفوقعوهة_27_نت،_خري_د_لج

Context Size 3:

_الروس_و_هي_ماية_ك_ف_موقريب._الدفاييات_ف_البالشخصياتول

Context Size 4:

_ديالو._ميامينش_و_تديال_أسباب_الغرب_6_يال_تعرّض_للحزب_الوه

Key Findings

- Best Predictability: Context-4 (word) with 97.9% predictability

- Branching Factor: Decreases with context size (more deterministic)

- Memory Trade-off: Larger contexts require more storage (1,574,083 unique contexts for word context-4 and 379,445 for subword context-4)

- Recommendation: Context-3 or Context-4 for text generation

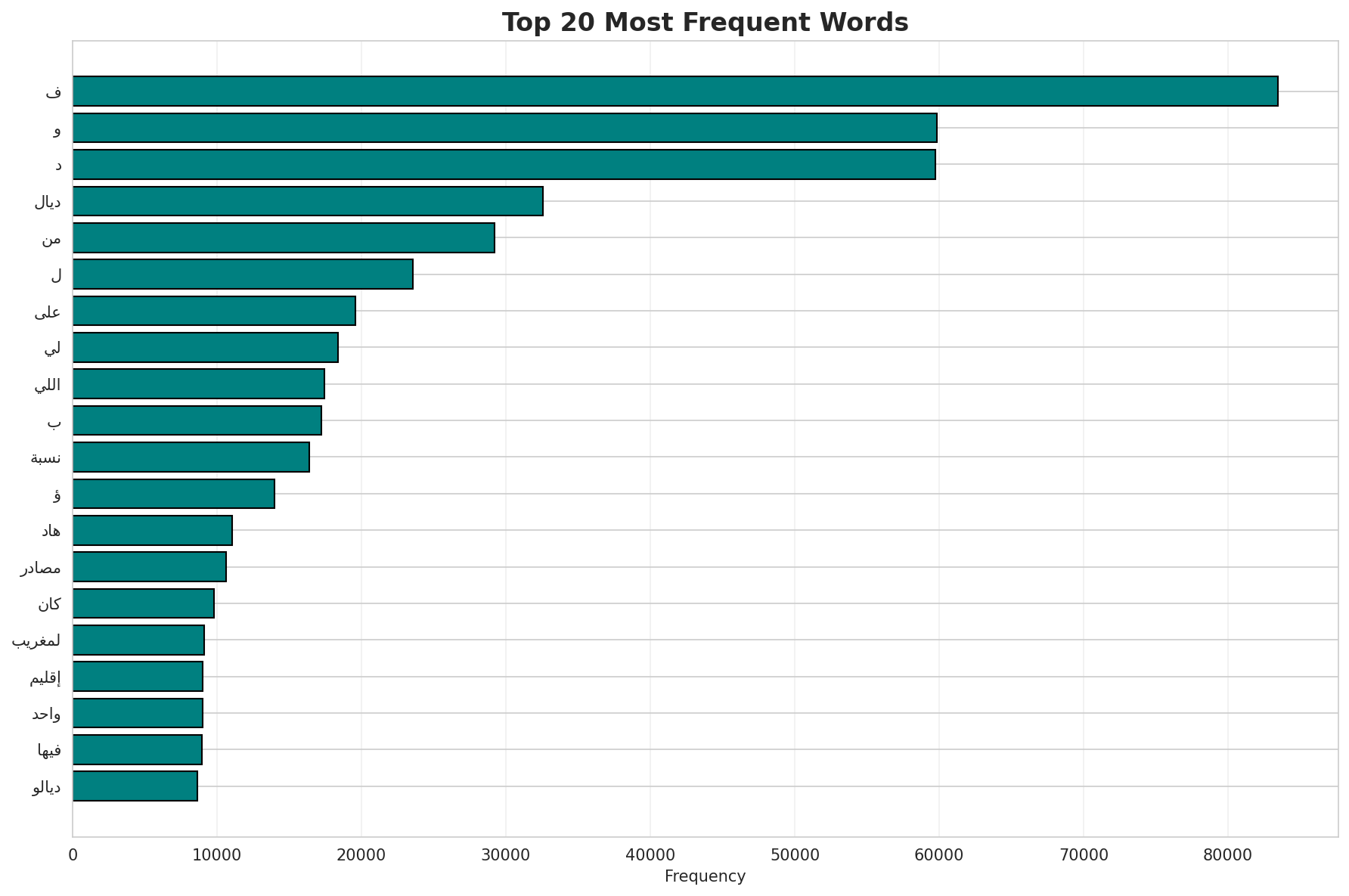

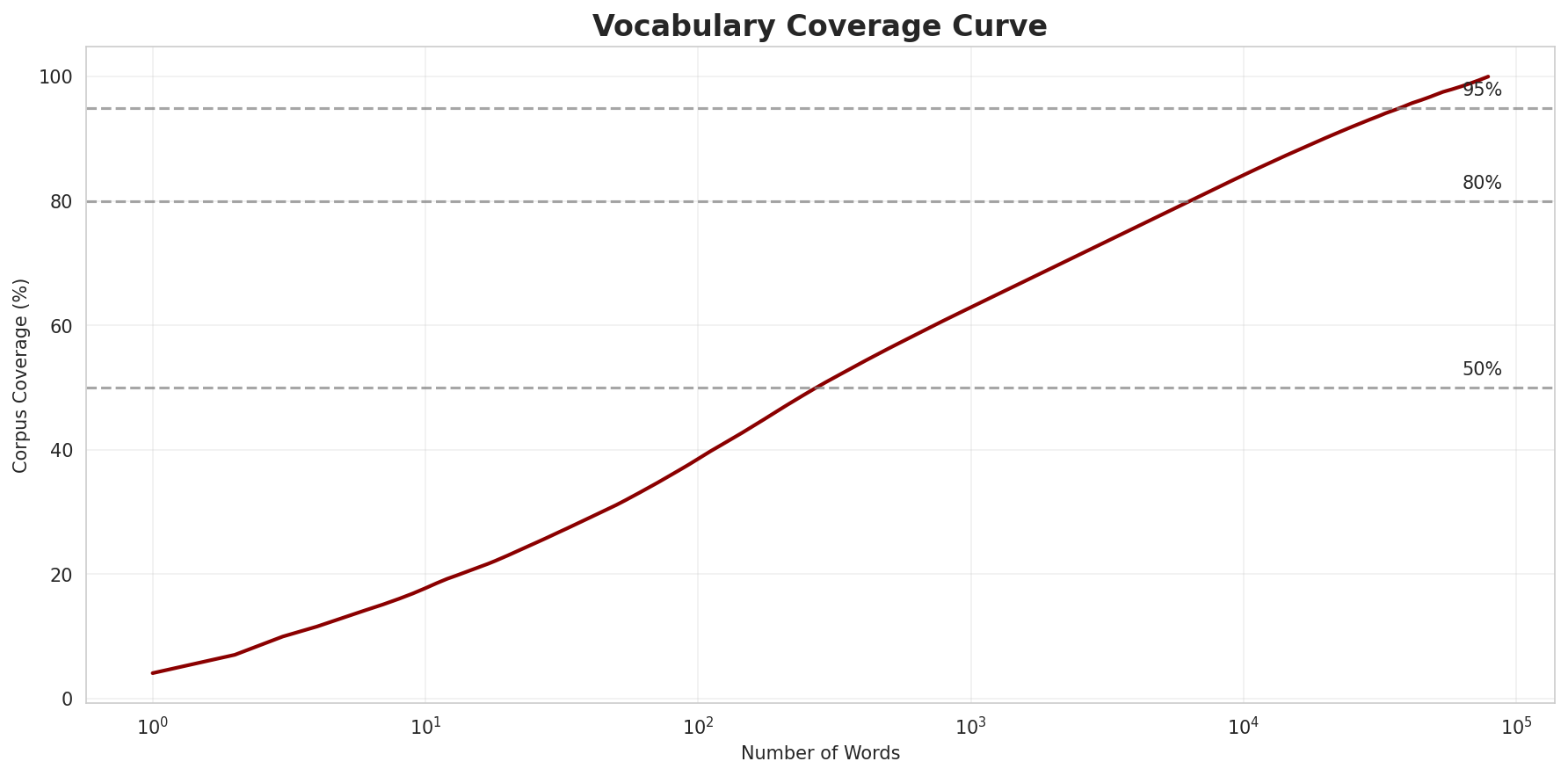

4. Vocabulary Analysis

Statistics

| Metric | Value |
|---|---|
| Vocabulary Size | 78,779 |
| Total Tokens | 2,032,841 |
| Mean Frequency | 25.80 |
| Median Frequency | 4 |
| Frequency Std Dev | 515.92 |
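These summary statistics can be reproduced from raw token counts. A minimal sketch, assuming a plain list of tokens as input; the report does not say whether its standard deviation is the population or the sample version, so the use of `statistics.pstdev` here is an assumption.

```python
import statistics
from collections import Counter


def vocab_stats(tokens):
    """Vocabulary-level frequency statistics for a list of tokens."""
    freqs = list(Counter(tokens).values())
    return {
        "vocab_size": len(freqs),
        "total_tokens": sum(freqs),
        "mean": statistics.mean(freqs),
        "median": statistics.median(freqs),
        # ASSUMPTION: population standard deviation; the report does not specify.
        "std": statistics.pstdev(freqs),
    }
```

The mean frequency is simply total tokens divided by vocabulary size: 2,032,841 / 78,779 ≈ 25.80. The gap between that mean and the median of 4 reflects the heavy skew analyzed under Zipf's law below.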

Most Common Words

| Rank | Word | Frequency |
|---|---|---|
| 1 | ف | 83,458 |
| 2 | و | 59,829 |
| 3 | د | 59,731 |
| 4 | ديال | 32,565 |
| 5 | من | 29,236 |
| 6 | ل | 23,572 |
| 7 | على | 19,570 |
| 8 | لي | 18,402 |
| 9 | اللي | 17,442 |
| 10 | ب | 17,233 |

Least Common Words (from vocabulary)

| Rank | Word | Frequency |
|---|---|---|
| 1 | بوفوار | 2 |
| 2 | بيتسي | 2 |
| 3 | وصانعي | 2 |
| 4 | وأهميتها | 2 |
| 5 | بورديو | 2 |
| 6 | بلومر | 2 |
| 7 | مقترحة | 2 |
| 8 | anchor | 2 |
| 9 | بعصبة | 2 |
| 10 | ماڭي | 2 |

Zipf's Law Analysis

| Metric | Value |
|---|---|
| Zipf Coefficient | 1.0213 |
| R² (Goodness of Fit) | 0.998918 |
| Adherence Quality | excellent |

Coverage Analysis

| Top N Words | Coverage |
|---|---|
| Top 100 | 38.6% |
| Top 1,000 | 62.9% |
| Top 5,000 | 77.8% |
| Top 10,000 | 84.2% |

Key Findings

- Zipf Compliance: R²=0.9989 indicates excellent adherence to Zipf's law

- High Frequency Dominance: Top 100 words cover 38.6% of corpus

- Long Tail: 68,779 words needed for remaining 15.8% coverage

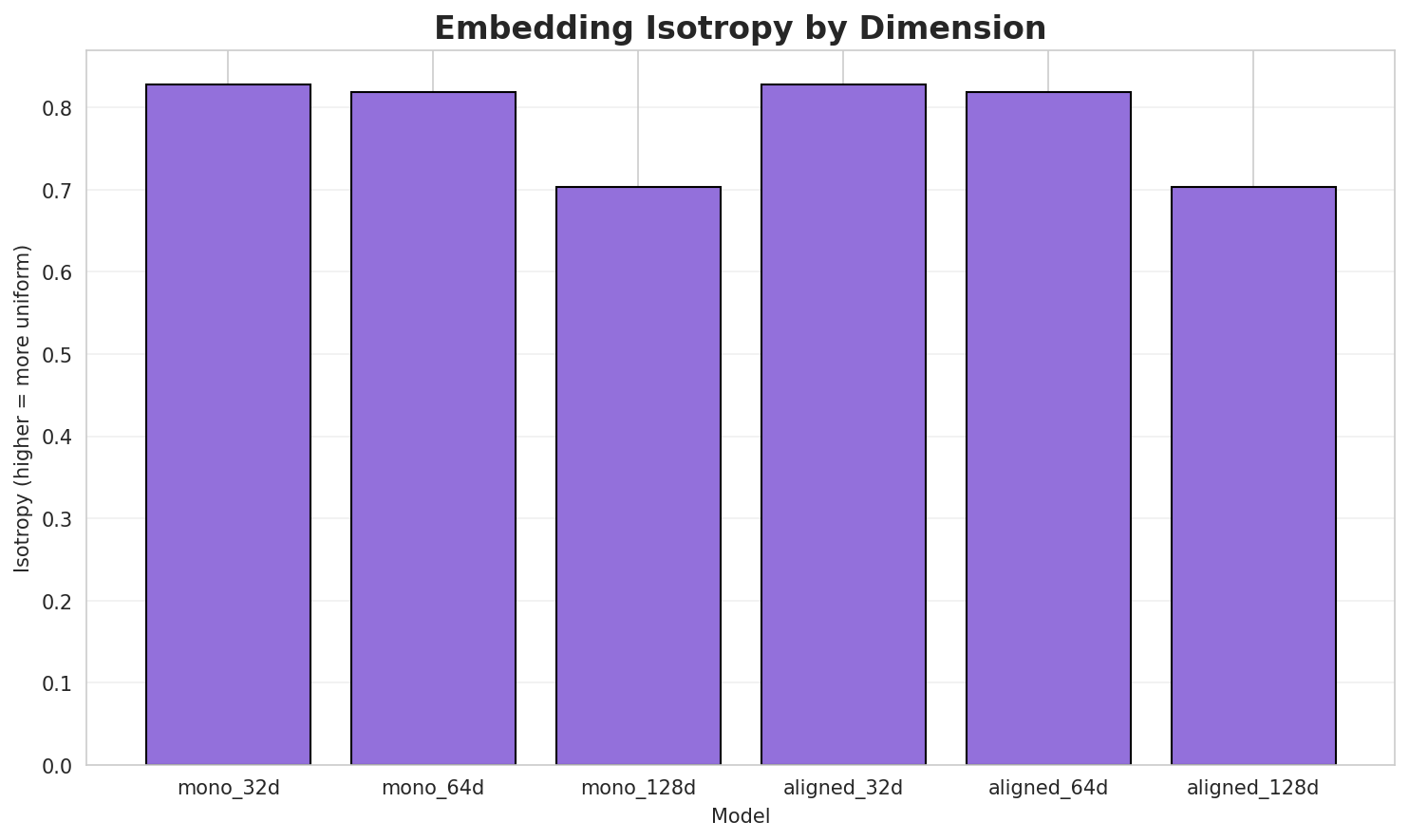

5. Word Embeddings Evaluation

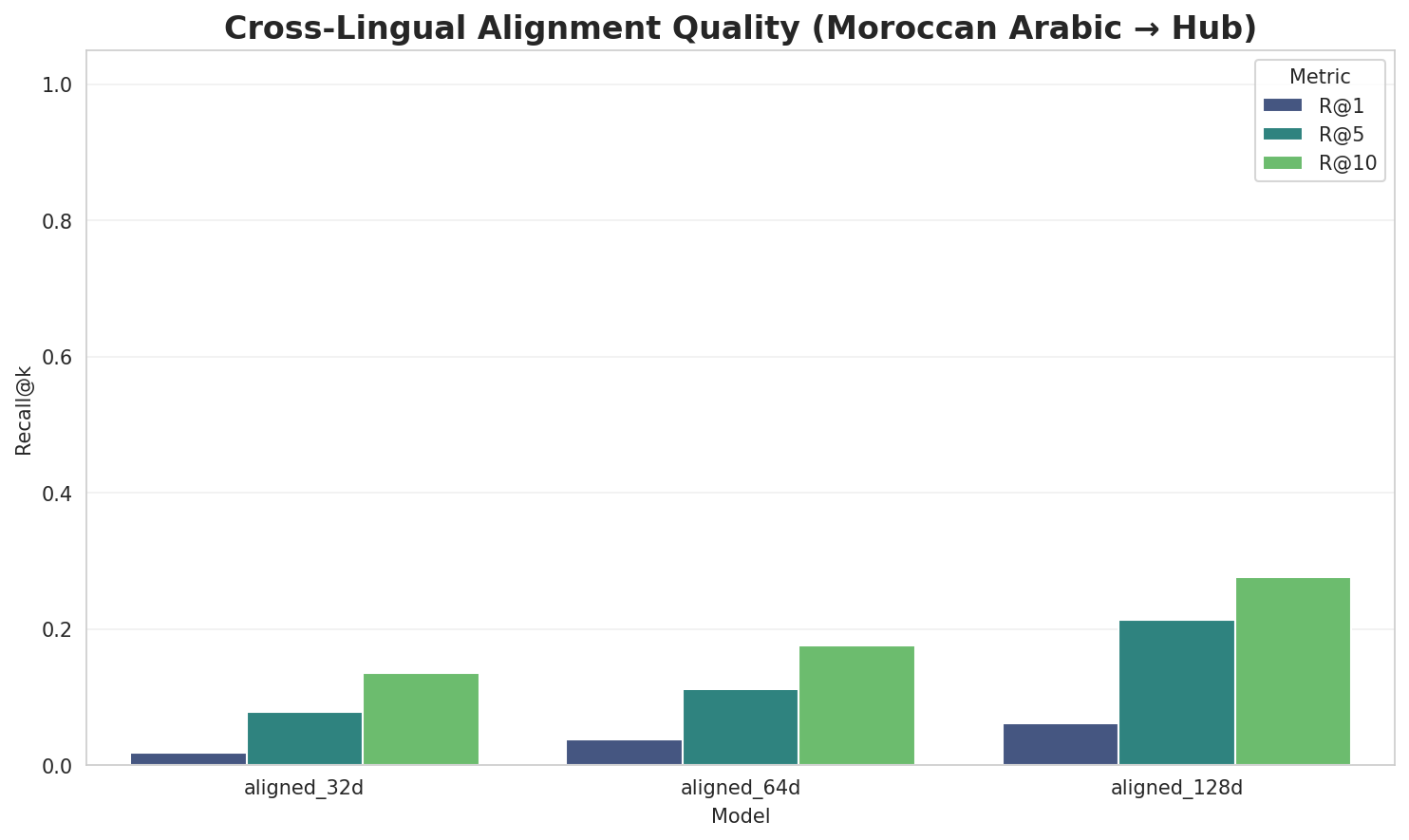

5.1 Cross-Lingual Alignment

5.2 Model Comparison

| Model | Dimension | Isotropy | Semantic Density | Alignment R@1 | Alignment R@10 |
|---|---|---|---|---|---|
| mono_32d | 32 | 0.8284 🏆 | 0.3330 | N/A | N/A |
| mono_64d | 64 | 0.8181 | 0.2588 | N/A | N/A |
| mono_128d | 128 | 0.7036 | 0.2093 | N/A | N/A |
| aligned_32d | 32 | 0.8284 | 0.3345 | 0.0180 | 0.1360 |
| aligned_64d | 64 | 0.8181 | 0.2550 | 0.0380 | 0.1760 |
| aligned_128d | 128 | 0.7036 | 0.2072 | 0.0620 | 0.2760 |

Key Findings

- Best Isotropy: mono_32d with 0.8284 (more uniform distribution)

- Semantic Density: Averaged across the six models, mean pairwise cosine similarity is 0.2663. Lower values indicate better semantic separation.

- Alignment Quality: Aligned models achieve up to 6.2% R@1 in cross-lingual retrieval.

- Recommendation: 128d aligned for best cross-lingual performance
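Alignment R@1 and R@10 can be read as the fraction of source words whose gold translation appears among the k nearest target-language vectors. A minimal sketch, assuming a bilingual test dictionary and plain cosine nearest-neighbor retrieval. The report does not state the dictionary, the target language, or whether a refined criterion such as CSLS is used, and `recall_at_k` is a hypothetical helper.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def recall_at_k(src_vecs, tgt_vecs, gold, k):
    """Fraction of gold pairs whose target word is among the k nearest target vectors.

    src_vecs / tgt_vecs: dict mapping word -> vector, already aligned into one space
    gold:                dict mapping source word -> expected translation
    """
    hits = 0
    for src, tgt in gold.items():
        query = src_vecs[src]
        ranked = sorted(tgt_vecs, key=lambda w: cosine(query, tgt_vecs[w]), reverse=True)
        hits += tgt in ranked[:k]
    return hits / len(gold)
```

Under this reading, the 0.0620 R@1 of aligned_128d means about 6 in 100 dictionary words retrieve their translation as the single nearest neighbor.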

6. Morphological Analysis (Experimental)

This section presents an automated morphological analysis derived from the statistical divergence between word-level and subword-level models. By analyzing where subword predictability spikes and where word-level coverage fails, we can infer linguistic structures without supervised data.

6.1 Productivity & Complexity

| Metric | Value | Interpretation | Recommendation |
|---|---|---|---|
| Productivity Index | 5.000 | High morphological productivity | Reliable analysis |
| Idiomaticity Gap | 1.114 | High formulaic/idiomatic content | - |

6.2 Affix Inventory (Productive Units)

These are the most productive prefixes and suffixes identified by sampling the vocabulary for global substitutability patterns. A unit is considered an affix if stripping it leaves a valid stem that appears in other contexts.

Productive Prefixes

| Prefix | Examples |
|---|---|
| ال- | الأمني, اللحظة, الفيرمات |
| لم- | لمتعصبين, لمحافض, لمونضامة |
| كا- | كاتدير, كايتحلو, كايقممو |

Productive Suffixes

| Suffix | Examples |
|---|---|
| -ة | سميّة, رقصة, اللحظة |
| -ات | سطراتيجيات, الفيرمات, لحتيفالات |
| -ية | الشرقية, اللاجنسية, ولوسطانية |
| -ين | لمتعصبين, ثنين, لمالحين |
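The criterion above (an affix counts if stripping it leaves a stem found elsewhere in the vocabulary) can be sketched as a simple lookup. This is a simplified, hypothetical `affix_support` helper run over a toy vocabulary, not the report's sampling-based substitutability procedure.

```python
def affix_support(vocab, affix, kind="prefix", min_stem_len=2):
    """Words in `vocab` that carry `affix` and whose remaining stem is also in `vocab`."""
    words = set(vocab)
    supported = []
    for word in words:
        if kind == "prefix" and word.startswith(affix):
            stem = word[len(affix):]
        elif kind == "suffix" and word.endswith(affix):
            stem = word[: -len(affix)]
        else:
            continue
        # Count the affix only when the leftover stem is itself attested.
        if len(stem) >= min_stem_len and stem in words:
            supported.append(word)
    return sorted(supported)


toy_vocab = {"اللحظة", "لحظة", "الأمني", "أمني", "المغرب", "رقصة", "رقص"}
print(affix_support(toy_vocab, "ال"))             # المغرب is excluded: مغرب is not in toy_vocab
print(affix_support(toy_vocab, "ة", kind="suffix"))
```

Ranking candidate affixes by how many supported words they have gives a rough productivity ordering comparable in spirit to the tables above.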

6.3 Bound Stems (Lexical Roots)

Bound stems are high-frequency subword units that are semantically cohesive but rarely appear as standalone words. These often correspond to the 'core' of a word that requires inflection or derivation to be valid.

| Stem | Cohesion | Substitutability | Examples |
|---|---|---|---|
| انية | 1.80x | 68 contexts | غانية, ثانية, سانية |
| اللو | 1.74x | 61 contexts | اللوه, اللور, اللول |
| الات | 1.71x | 65 contexts | تالات, حالات, صالات |
| جماع | 1.90x | 38 contexts | جماعي, تجماع, إجماع |
| النا | 1.63x | 63 contexts | الناي, النار, الناس |
| لمغر | 1.92x | 30 contexts | لمغرب, لمغربب, للمغرب |
| إحصا | 2.13x | 17 contexts | إحصاء, لإحصا, إحصائي |
| مغري | 2.08x | 18 contexts | مغريب, مغرية, مغريبي |
| حصاء | 2.24x | 14 contexts | إحصاء, لإحصاء, ليحصاء |
| دهوم | 2.14x | 16 contexts | ضدهوم, يردهوم, زادهوم |
| قليم | 2.06x | 17 contexts | فقليم, اقليم, إقليم |
| لجوا | 1.77x | 26 contexts | لجواب, لجواد, الجوا |

6.4 Affix Compatibility (Co-occurrence)

This table shows which prefixes and suffixes most frequently co-occur on the same stems, revealing the 'stacking' rules of the language's morphology.

| Prefix | Suffix | Frequency | Examples |
|---|---|---|---|
| ال- | -ة | 280 words | الراكوبة, العمدة |
| ال- | -ات | 163 words | الشلالات, العبرات |
| ال- | -ية | 152 words | الزراعية, الطباشيرية |
| ال- | -ين | 76 words | الموحدين, الاثنين |
| لم- | -ة | 66 words | لمملكة, لمُحمدية |
| لم- | -ين | 45 words | لموناضيلين, لمعتقلين |
| لم- | -ات | 25 words | لمونضّامات, لممرات |
| لم- | -ية | 21 words | لمُحمدية, لمراكشية |
| كا- | -ين | 2 words | كايسين, كاتبين |

6.5 Recursive Morpheme Segmentation

Using Recursive Hierarchical Substitutability, we decompose complex words into their constituent morphemes. This approach handles nested affixes (e.g., prefix-prefix-root-suffix).

| Word | Suggested Split | Confidence | Stem |
|---|---|---|---|
| التوجيهات | ال-توجيه-ات | 6.0 | توجيه |
| الصومالية | ال-صومال-ية | 6.0 | صومال |
| الپاكستانية | ال-پاكستان-ية | 6.0 | پاكستان |
| الدوّازات | ال-دوّاز-ات | 6.0 | دوّاز |
| الصالونات | ال-صالون-ات | 6.0 | صالون |
| التعبيرية | ال-تعبير-ية | 6.0 | تعبير |
| الانقلابية | ال-انقلاب-ية | 6.0 | انقلاب |
| لمنقارضين | لم-نقارض-ين | 6.0 | نقارض |
| التقليديين | ال-تقليدي-ين | 6.0 | تقليدي |
| لمنتاشرين | لم-نتاشر-ين | 6.0 | نتاشر |
| الماكينات | ال-ماكين-ات | 6.0 | ماكين |
| البرونزية | ال-برونز-ية | 6.0 | برونز |
| التكوينية | ال-تكوين-ية | 6.0 | تكوين |
| التعليمية | ال-تعليم-ية | 6.0 | تعليم |
| التلفزيونية | ال-تلفزيون-ية | 6.0 | تلفزيون |
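A much simpler relative of the recursive idea is greedy affix peeling: strip any known prefix or suffix from the Section 6.2 inventory and recurse until the remainder is a known stem. This sketch is not the report's Recursive Hierarchical Substitutability algorithm. The stem lexicon passed in and the `segment` helper are assumptions made for illustration, and no confidence score is computed.

```python
# Affixes taken from the inventory in Section 6.2; longer suffixes are tried first.
PREFIXES = ("ال", "لم", "كا")
SUFFIXES = ("ية", "ات", "ين", "ة")


def segment(word, stems, min_stem_len=2):
    """Return a list of morphemes ending in a stem from `stems`, or None.

    Tries every known prefix, then every known suffix, recursing on the
    remainder so that stacked affixes are peeled off one at a time.
    """
    if word in stems:
        return [word]
    for prefix in PREFIXES:
        rest = word[len(prefix):]
        if word.startswith(prefix) and len(rest) >= min_stem_len:
            parts = segment(rest, stems, min_stem_len)
            if parts:
                return [prefix] + parts
    for suffix in SUFFIXES:
        rest = word[: -len(suffix)]
        if word.endswith(suffix) and len(rest) >= min_stem_len:
            parts = segment(rest, stems, min_stem_len)
            if parts:
                return parts + [suffix]
    return None


print(segment("التوجيهات", {"توجيه"}))
print(segment("لمنقارضين", {"نقارض"}))
```

Requiring the final remainder to be an attested stem is what keeps the recursion from over-stripping words that merely begin with ال or end in ة.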

6.6 Linguistic Interpretation

Automated Insight: Moroccan Arabic shows high morphological productivity. At low n-gram orders, subword models reach far lower perplexity than word models (424 vs. 7,228 for 2-grams), suggesting a rich system of affixation or compounding.

Note on Idiomaticity: The high Idiomaticity Gap suggests a large number of frequent multi-word expressions or formulaic sequences that are statistically distinct from their component parts.

7. Summary & Recommendations

Production Recommendations

| Component | Recommended | Rationale |
|---|---|---|
| Tokenizer | 64k BPE | Best compression (4.17x) |
| N-gram | 2-gram (subword) | Lowest perplexity (424) |
| Markov | Context-4 (word) | Highest predictability (97.9%) |
| Embeddings | 128d aligned | Best cross-lingual alignment (6.2% R@1) |

Appendix: Metrics Glossary & Interpretation Guide

This section provides definitions, intuitions, and guidance for interpreting the metrics used throughout this report.

Tokenizer Metrics

Compression Ratio

Definition: The ratio of characters to tokens (chars/token). Measures how efficiently the tokenizer represents text.

Intuition: Higher compression means fewer tokens needed to represent the same text, reducing sequence lengths for downstream models. A 3x compression means ~3 characters per token on average.

What to seek: Higher is generally better for efficiency, but extremely high compression may indicate overly aggressive merging that loses morphological information.

Average Token Length (Fertility)

Definition: Mean number of characters per token produced by the tokenizer. (In much of the tokenizer literature, "fertility" instead denotes the average number of tokens per word.)

Intuition: Reflects the granularity of tokenization. Longer tokens capture more context but may struggle with rare words; shorter tokens are more flexible but increase sequence length.

What to seek: Values between 2 and 5 characters are typical for most languages. Arabic and other morphologically rich languages may benefit from slightly longer tokens.

Unknown Token Rate (OOV Rate)

Definition: Percentage of tokens that map to the unknown/UNK token, indicating words the tokenizer cannot represent.

Intuition: Lower OOV means better vocabulary coverage. High OOV indicates the tokenizer encounters many unseen character sequences.

What to seek: Below 1% is excellent; below 5% is acceptable. BPE tokenizers typically achieve very low OOV due to subword fallback.
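The three tokenizer metrics reduce to simple ratios over a tokenized evaluation set. A minimal sketch, assuming SentencePiece-style tokens (the `▁` word-boundary marker visible in the Section 1 samples) and an `<unk>` string for unknown tokens. Whether the report strips `▁` before measuring token length is also an assumption.

```python
SPM_SPACE = "\u2581"  # "▁", the SentencePiece word-boundary marker


def tokenizer_metrics(texts, tokenized, unk_token="<unk>"):
    """Return (compression, avg_token_len, unk_rate_percent).

    texts:     list of raw strings
    tokenized: list of token lists, one per text
    """
    all_tokens = [tok for toks in tokenized for tok in toks]
    n_tokens = len(all_tokens)
    # Compression ratio: characters of raw text per token.
    compression = sum(len(t) for t in texts) / n_tokens
    # ASSUMPTION: the boundary marker is not counted as a character.
    avg_token_len = sum(len(tok.replace(SPM_SPACE, "")) for tok in all_tokens) / n_tokens
    unk_rate = 100.0 * sum(tok == unk_token for tok in all_tokens) / n_tokens
    return compression, avg_token_len, unk_rate
```

For example, the 64k row's 4.171x means each token covers a little over four characters of Moroccan Arabic text on average.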

N-gram Model Metrics

Perplexity

Definition: Measures how "surprised" the model is by test data. Mathematically: 2^(cross-entropy). Lower values indicate better prediction.

Intuition: If perplexity is 100, the model is as uncertain as if choosing uniformly among 100 options at each step. A perplexity of 10 means effectively choosing among 10 equally likely options.

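What to seek: Lower is better. Perplexity usually decreases with larger n-grams (more context) when data is plentiful, but sparse higher-order models can show the opposite, as the subword results in Section 2 do. Values vary widely by language and corpus size.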

Entropy

Definition: Average information content (in bits) needed to encode the next token given the context. Related to perplexity: perplexity = 2^entropy.

Intuition: High entropy means high uncertainty/randomness; low entropy means predictable patterns. Natural language typically has entropy between 1 and 4 bits per character.

What to seek: Lower entropy indicates more predictable text patterns. Entropy typically decreases as n-gram size increases, provided there is enough data to estimate the longer patterns reliably.
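To make the relationship concrete, here is a toy bigram model scored on a token sequence, returning cross-entropy in bits so that perplexity is `2 ** H`. Add-one (Laplace) smoothing is an assumption chosen to keep unseen bigrams from producing zero probabilities; the report does not state which smoothing its n-gram models use.

```python
import math
from collections import Counter


def train_bigram(tokens):
    """Count contexts, bigrams and the vocabulary from a token list."""
    contexts = Counter(tokens[:-1])
    bigrams = Counter(zip(tokens, tokens[1:]))
    return contexts, bigrams, set(tokens)


def cross_entropy_bits(tokens, model):
    """Average -log2 P(w_i | w_{i-1}) over the bigrams of `tokens` (add-one smoothing)."""
    contexts, bigrams, vocab = model
    v = len(vocab)
    pairs = list(zip(tokens, tokens[1:]))
    total = 0.0
    for prev, nxt in pairs:
        p = (bigrams[(prev, nxt)] + 1) / (contexts[prev] + v)
        total -= math.log2(p)
    return total / len(pairs)


model = train_bigram(["a", "b", "a", "b"])
h = cross_entropy_bits(["a", "b"], model)
print(h, 2 ** h)  # P(b | a) = 3/4, so perplexity = 4/3
```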

Coverage (Top-K)

Definition: Percentage of corpus occurrences explained by the top K most frequent n-grams.

Intuition: High coverage with few patterns indicates repetitive/formulaic text; low coverage suggests diverse vocabulary usage.

What to seek: Depends on use case. For language modeling, moderate coverage (40-60% with top-1000) is typical for natural text.
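Top-K coverage is the share of all n-gram occurrences accounted for by the K most frequent distinct n-grams. A short sketch; passing unigrams (n = 1) gives the same kind of figure as the Vocabulary Coverage metric described later in this glossary.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return list(zip(*(tokens[i:] for i in range(n))))


def top_k_coverage(items, k):
    """Percentage of occurrences covered by the k most frequent distinct items."""
    counts = Counter(items)
    total = sum(counts.values())
    covered = sum(c for _, c in counts.most_common(k))
    return 100.0 * covered / total


toks = "a b a b a c".split()
print(top_k_coverage(ngrams(toks, 2), 1))  # ("a", "b") and ("b", "a") each occur twice out of 5
```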

Markov Chain Metrics

Average Entropy

Definition: Mean entropy across all contexts, measuring average uncertainty in next-word prediction.

Intuition: Lower entropy means the model is more confident about what comes next. Context-1 has high entropy (many possible next words); Context-4 has low entropy (few likely continuations).

What to seek: Decreasing entropy with larger context sizes. Very low entropy (<0.1) indicates highly deterministic transitions.

Branching Factor

Definition: Average number of unique next tokens observed for each context.

Intuition: High branching = many possible continuations (flexible but uncertain); low branching = few options (predictable but potentially repetitive).

What to seek: Branching factor should decrease with context size. Values near 1.0 indicate nearly deterministic chains.

Predictability

Definition: Derived metric: (1 - normalized_entropy) × 100%. Indicates how deterministic the model's predictions are.

Intuition: 100% predictability means the next word is always certain; 0% means completely random. Real text falls between these extremes.

What to seek: Higher predictability for text generation quality, but too high (>98%) may produce repetitive output.
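The glossary does not pin down how entropies are averaged or how predictability is normalized, so the sketch below makes two labeled assumptions: contexts are averaged without frequency weighting, and each context's entropy is normalized by log2 of its number of observed continuations. The perplexity it returns, 2 raised to the average entropy, does match the relationship between the Avg Entropy and Perplexity columns in Section 3 (for example, 2^0.8561 ≈ 1.810).

```python
import math
from collections import Counter, defaultdict


def markov_stats(tokens, order):
    """Average entropy, perplexity, branching factor and predictability of an order-k chain."""
    transitions = defaultdict(Counter)
    for i in range(len(tokens) - order):
        transitions[tuple(tokens[i:i + order])][tokens[i + order]] += 1

    entropies, branching, normalized = [], [], []
    for nexts in transitions.values():
        total = sum(nexts.values())
        h = -sum((c / total) * math.log2(c / total) for c in nexts.values())
        entropies.append(h)
        branching.append(len(nexts))
        # ASSUMPTION: normalize by log2 of the number of observed continuations.
        normalized.append(h / math.log2(len(nexts)) if len(nexts) > 1 else 0.0)

    n = len(transitions)
    avg_entropy = sum(entropies) / n  # ASSUMPTION: unweighted mean over contexts
    return {
        "avg_entropy": avg_entropy,
        "perplexity": 2 ** avg_entropy,
        "branching_factor": sum(branching) / n,
        "unique_contexts": n,
        "predictability": 100.0 * (1 - sum(normalized) / n),
    }
```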

Vocabulary & Zipf's Law Metrics

Zipf's Coefficient

Definition: The exponent s in the power law frequency ∝ rank^(-s), i.e. the negated slope of the log-log plot of word frequency vs. rank. Zipf's law predicts s ≈ 1 (a slope of about -1).

Intuition: An exponent near 1 indicates the corpus follows natural language patterns where a few words are very common and most words are rare.

What to seek: Values between 0.8 and 1.2 indicate a healthy natural language distribution. Deviations may suggest domain-specific or artificial text.

R² (Coefficient of Determination)

Definition: Measures how well the linear fit in log-log space explains the frequency-rank relationship. Ranges from 0 to 1.

Intuition: R² near 1.0 means the data closely follows Zipf's law; lower values indicate deviation from expected word frequency patterns.

What to seek: R² > 0.95 is excellent; > 0.99 indicates near-perfect Zipf adherence typical of large natural corpora.
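A least-squares fit in log-log space yields both numbers in the Zipf table. This sketch assumes every vocabulary entry is included in the fit and returns the exponent as a positive number (the negated slope), matching the sign convention of the 1.0213 reported in Section 4. The choice of logarithm base affects neither the slope nor R².

```python
import math


def zipf_fit(frequencies):
    """Fit log(freq) = a - s*log(rank) by ordinary least squares.

    Frequencies are sorted in descending order so that rank 1 is the most
    frequent word. Returns (s, r_squared), where s is the Zipf exponent.
    """
    freqs = sorted((f for f in frequencies if f > 0), reverse=True)
    xs = [math.log(rank) for rank in range(1, len(freqs) + 1)]
    ys = [math.log(f) for f in freqs]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return -slope, 1.0 - ss_res / ss_tot
```

A perfectly Zipfian list such as `[1000 / r for r in range(1, 501)]` returns an exponent of 1 and an R² of 1.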

Vocabulary Coverage

Definition: Cumulative percentage of corpus tokens accounted for by the top N words.

Intuition: Shows how concentrated word usage is. If top-100 words cover 50% of text, the corpus relies heavily on common words.

What to seek: Top-100 covering 30-50% is typical. Higher coverage indicates more repetitive text; lower suggests richer vocabulary.

Word Embedding Metrics

Isotropy

Definition: Measures how uniformly distributed vectors are in the embedding space. Computed as the ratio of minimum to maximum singular values.

Intuition: High isotropy (near 1.0) means vectors spread evenly in all directions; low isotropy means vectors cluster in certain directions, reducing expressiveness.

What to seek: Higher isotropy generally indicates better-quality embeddings. Values > 0.1 are reasonable; > 0.3 is good. Lower-dimensional embeddings tend to have higher isotropy.

Average Norm

Definition: Mean magnitude (L2 norm) of word vectors in the embedding space.

Intuition: Indicates the typical "length" of vectors. Consistent norms suggest stable training; high variance may indicate some words are undertrained.

What to seek: Relatively consistent norms across models. The absolute value matters less than consistency (low std deviation).

Cosine Similarity

Definition: Measures angular similarity between vectors, ranging from -1 (opposite) to 1 (identical direction).

Intuition: Words with similar meanings should have high cosine similarity. This is the standard metric for semantic relatedness in embeddings.

What to seek: Semantically related words should score > 0.5; unrelated words should be near 0. Synonyms often score > 0.7.
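Cosine similarity, and the semantic density reported in Section 5 (described there as average pairwise similarity), can be computed in a few lines. This is a dependency-free sketch over plain Python lists. The report does not specify which word sample its density is computed over, so `semantic_density` simply averages over every pair of vectors it is given.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity in [-1, 1]; undefined (ZeroDivisionError) for zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def semantic_density(vectors):
    """Mean cosine similarity over all unordered pairs of vectors."""
    pairs = list(combinations(vectors, 2))
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)


print(cosine([1, 0], [0, 1]))                       # orthogonal vectors
print(semantic_density([[1, 0], [0, 1], [1, 1]]))   # sqrt(2) / 3
```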

t-SNE Visualization

Definition: t-Distributed Stochastic Neighbor Embedding - a dimensionality reduction technique that preserves local structure for visualization.

Intuition: Clusters in t-SNE plots indicate groups of semantically related words. Spread indicates vocabulary diversity; tight clusters suggest semantic coherence.

What to seek: Meaningful clusters (e.g., numbers together, verbs together). Avoid over-interpreting distances - t-SNE preserves local, not global, structure.

General Interpretation Guidelines

- Compare within model families: Metrics are most meaningful when comparing models of the same type (e.g., 8k vs 64k tokenizer).

- Consider trade-offs: Better performance on one metric often comes at the cost of another (e.g., compression vs. OOV rate).

- Context matters: Optimal values depend on downstream tasks. Text generation may prioritize different metrics than classification.

- Corpus influence: All metrics are influenced by corpus characteristics. Wikipedia text differs from social media or literature.

- Language-specific patterns: Morphologically rich languages (like Arabic) may show different optimal ranges than analytic languages.

Visualizations Index

| Visualization | Description |
|---|---|
| Tokenizer Compression | Compression ratios by vocabulary size |
| Tokenizer Fertility | Average token length by vocabulary |
| Tokenizer OOV | Unknown token rates |
| Tokenizer Total Tokens | Total tokens by vocabulary |
| N-gram Perplexity | Perplexity by n-gram size |
| N-gram Entropy | Entropy by n-gram size |
| N-gram Coverage | Top pattern coverage |
| N-gram Unique | Unique n-gram counts |
| Markov Entropy | Entropy by context size |
| Markov Branching | Branching factor by context |
| Markov Contexts | Unique context counts |
| Zipf's Law | Frequency-rank distribution with fit |
| Vocab Frequency | Word frequency distribution |
| Top 20 Words | Most frequent words |
| Vocab Coverage | Cumulative coverage curve |
| Embedding Isotropy | Vector space uniformity |
| Embedding Norms | Vector magnitude distribution |
| Embedding Similarity | Word similarity heatmap |
| Nearest Neighbors | Similar words for key terms |
| t-SNE Words | 2D word embedding visualization |
| t-SNE Sentences | 2D sentence embedding visualization |
| Position Encoding | Encoding method comparison |
| Model Sizes | Storage requirements |
| Performance Dashboard | Comprehensive performance overview |

About This Project

Data Source

Models trained on wikipedia-monthly - a monthly snapshot of Wikipedia articles across 300+ languages.

Project

A project by Wikilangs - Open-source NLP models for every Wikipedia language.

Maintainer

Citation

If you use these models in your research, please cite:

@misc{wikilangs2025,
  author = {Kamali, Omar},
  title = {Wikilangs: Open NLP Models for Wikipedia Languages},
  year = {2025},
  doi = {10.5281/zenodo.18073153},
  publisher = {Zenodo},
  url = {https://huggingface.co/wikilangs},
  institution = {Omneity Labs}
}

License

MIT License - Free for academic and commercial use.

Links

- 🌐 Website: wikilangs.org

- 🤗 Models: huggingface.co/wikilangs

- 📊 Data: wikipedia-monthly

- 👤 Author: Omar Kamali

- 🤝 Sponsor: Featherless AI

Generated by Wikilangs Models Pipeline

Report Date: 2026-01-03 16:42:17